You’ve probably heard about chunking if you’ve spent even ten minutes trying to build a Retrieval-Augmented Generation (RAG) system. You know, breaking text into pieces so the model can “remember” it better later. Sounds simple, right? But here’s the catch: how you chunk makes or breaks the whole thing.

Let’s be honest, traditional chunking is… lazy. It chops text into equal parts, like cutting a loaf of bread into perfectly even slices, regardless of whether or not those slices actually make sense together. The approach doesn’t care where the meaning stops or starts paragraph midpoints, sentence breaks, context boundaries? Nope. It just follows the knife.

And then we wonder why the answers sound confused.

That’s where semantic chunking comes in. (Of course, there are other methods of chunking that far outweigh token-length-based ones, but here we’ll focus on semantic chunking.)

So, what’s semantic chunking?

Instead of slicing text by raw length (say, every 500 tokens), semantic chunking uses meaning to decide where to cut. It’s like having someone who actually reads the text and says, “Okay, this paragraph is talking about how LLMs retrieve knowledge, and this next one shifts to evaluation metrics let’s separate those.”

It groups sentences that belong together conceptually, not mechanically.

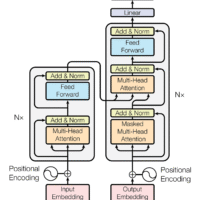

Under the hood, semantic chunking often uses embeddings; mathematical fingerprints of meaning. When two sentences have similar embeddings, they likely talk about the same thing. If they don’t, that’s your signal to start a new chunk.

Why it matters for RAG systems

In a RAG system, you’ve got two main jobs:

- Find the most relevant information (retrieval)

- Use it to generate a useful, accurate response (generation)

But retrieval only works if your chunks actually carry coherent meaning. If your chunks are chopped mid-thought, your retriever might pull in fragments that don’t tell the full story or worse, it might miss the right answer entirely because the context was broken up in the wrong place.

Imagine asking a question like:

“How does Tesla’s autopilot handle lane changes?”

If your text database has a chunk ending halfway through the explanation say it includes “Tesla’s autopilot initiates lane changes when…” but the rest is in another chunk your RAG system might not even grab the important part. You’d get something vague, like “Tesla’s autopilot assists drivers,” and miss the juicy details about the lane-change logic.

This issue becomes even more serious when dealing with legal or financial documents. In those domains, a misplaced sentence or a broken clause can change the entire meaning of a contract or a financial statement. If your RAG system chunks mid-definition or splits an important regulation from its conditions, the output could become not just inaccurate, but misleading or even non-compliant. Semantic chunking helps preserve the integrity of those tightly connected clauses, ensuring that retrieval respects the logical and legal boundaries that professionals depend on.

Semantic chunking fixes that. It keeps the “story” of an idea together, so the retriever can find the right context to feed into the model.

The payoff: theoretical benefits

Here’s the thing, there isn’t a clear consensus yet on whether semantic chunking always outperforms traditional approaches. Research like Qu et al., 2024 suggests the results can vary depending on the dataset and context. Some methods even change sentence order or use clustering to re-group meaning, which isn’t always the goal here.

So instead of quoting performance numbers, let’s think about why semantic chunking should help; in theory.

Semantic chunking aims to keep related ideas together, preserving the flow of meaning. When a model retrieves text, it can draw from conceptually complete sections rather than arbitrary slices. That means fewer gaps in reasoning and less chance of misinterpretation. For documents that naturally flow between multiple themes like financial reports or research papers; semantic chunking can help the retriever focus on sections that genuinely answer the question being asked.

In short, it’s about coherence. Not proven superiority, but potential for clarity and conceptual integrity.

How it actually works (without getting too nerdy)

Here’s a simple mental picture:

- Embedding step – Each sentence (or paragraph) is converted into a vector — a list of numbers that represent its meaning.

- Similarity check – Compare how close these vectors are to each other in “meaning space.”

- Boundary detection – When similarity drops below a certain threshold, that’s your natural “chunk break.”

- Chunk building – Group similar sentences together until the chunk reaches an optimal size (usually between 200–1000 tokens).

This way, you’re respecting both semantic flow and token limits.

Some implementations even go further, using sentence transformers, clustering algorithms, or dynamic windowing — but the principle’s the same: let meaning decide, not a ruler.

Bringing it home

Look, at the end of the day, building a good RAG system isn’t about having the biggest model or the flashiest prompt. It’s about feeding it the right information, in the right shape.

Semantic chunking is like giving your model clean, well-organized notes instead of random scribbles. It doesn’t have to “guess” what you meant — it already has context that flows naturally.

And that, honestly, changes everything.

Because once your chunks make sense, your retrieval gets sharper, your answers get clearer, and your users stop saying,

“That’s not what I asked…”

So yeah, semantic chunking might sound like a small tweak, but in the world of RAG, it’s the quiet revolution.

It’s what makes your system go from technically working to actually understanding.