Imagine you’re at a playground, and you see kids playing with marbles. Each marble represents a stock, and the kids trade marbles with each other. You want to join them and become the best marble trader. The playground represents the stock market, and your goal is to maximize your profit from trading marbles (stocks).

In this game, you can do three things at any moment: buy a marble, sell a marble, or do nothing (hold). To make the best decisions, you must think about the current situation (state) and how your actions might influence your profits.

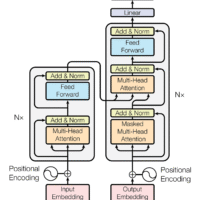

Now, let’s introduce the idea of a Markov Decision Process (MDP). Think of it as a game where each round is a new situation or state, and you have to choose the best action (buy, sell, or hold) based on what you know. In this game, you don’t need to remember how you got here: everything relevant about the past is assumed to be captured in the current state. All that matters is the current state and how you can maximize your rewards (profits) from now on.

To model stock trading as an MDP, we need to define three core things:

- State: The state represents all the information you need to make decisions. In our marble game, it could be the number of marbles you have, the marbles’ colors, the prices at which they’re being traded, and other market conditions. In stock trading, the state can include stock prices, trading volume, technical indicators, and other relevant data.

- Actions: These are the decisions you can make in each state. In our marble game, you can buy, sell, or hold marbles. In stock trading, the actions are the same – you can buy, sell, or hold stocks.

- Rewards: The reward is the outcome you get after taking an action. In the marble game, it could be the profit you make from trading marbles. In stock trading, the reward is usually the profit or loss from your trading decisions.
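
To make the three pieces above concrete, here is a minimal Python sketch. Everything in it is an illustrative assumption rather than a standard API: the `State` fields (`price`, `shares`, `cash`), the one-share-per-trade rule, and the choice of reward as the change in total portfolio value are just one simple way to set things up.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Tuple


class Action(Enum):
    """The three decisions available in every state."""
    BUY = "buy"
    SELL = "sell"
    HOLD = "hold"


@dataclass(frozen=True)
class State:
    """A deliberately tiny state (assumed fields, for illustration only)."""
    price: float  # current price of one share
    shares: int   # shares currently held
    cash: float   # cash available for trading


def portfolio_value(state: State) -> float:
    """Cash plus the market value of the shares held."""
    return state.cash + state.shares * state.price


def step(state: State, action: Action, next_price: float) -> Tuple[State, float]:
    """Trade at most one share at the current price, then let the price move.

    Returns the next state and the reward, defined here (as an assumption)
    as the change in total portfolio value.
    """
    shares, cash = state.shares, state.cash
    if action is Action.BUY and cash >= state.price:
        shares += 1
        cash -= state.price
    elif action is Action.SELL and shares > 0:
        shares -= 1
        cash += state.price
    # HOLD, or a trade that cannot be afforded, leaves the holdings unchanged.
    next_state = State(price=next_price, shares=shares, cash=cash)
    reward = portfolio_value(next_state) - portfolio_value(state)
    return next_state, reward
```

For example, starting from `State(price=10.0, shares=0, cash=100.0)`, choosing `Action.BUY` and then seeing the price rise to `12.0` yields a reward of `2.0`: you now hold one share worth 12 plus 90 in cash. A real trading state would carry far more information (volume, indicators, and so on), but the shape of the problem stays the same.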

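The Markov part of the MDP means that the future depends only on the current state and your action, not on the past. This means that if you know the current state (market conditions, your portfolio, etc.) and the action you take, you have everything you need to estimate how likely each possible next state and reward is. The outcome itself can still be uncertain, since prices may go up or down, but knowing the earlier history would not improve your estimate.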
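
The Markov property can be illustrated with a toy price simulator. This is a sketch under a strong assumption: the price moves up or down by 1% with equal probability, which is a made-up model and not how real markets behave. The point is only that `sample_next_price` (a hypothetical helper) receives the current price and nothing else, so the history cannot influence the next step.

```python
import random
from typing import List


def sample_next_price(price: float, rng: random.Random) -> float:
    """Sample the next price using only the current price.

    Assumed toy model: the price moves +1% or -1% with equal probability.
    No price history is passed in, which is the Markov property in code.
    """
    move = rng.choice([-0.01, 0.01])
    return price * (1 + move)


def simulate(start_price: float, steps: int, seed: int = 0) -> List[float]:
    """Generate a price path by repeatedly sampling from the latest price."""
    rng = random.Random(seed)
    prices = [start_price]
    for _ in range(steps):
        prices.append(sample_next_price(prices[-1], rng))
    return prices


if __name__ == "__main__":
    print(simulate(100.0, steps=5))
```

Notice that the next price is sampled, not computed exactly: the current state pins down the probabilities of what happens next, not the outcome itself.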

In summary, modelling stock trading as a Markov Decision Process is like playing a game where you make decisions based on the current situation to maximize your rewards (profits). The MDP framework helps you simplify the problem by focusing on the current state and the actions you can take, without worrying about the past.